COCO-Tasks Dataset


When humans solve everyday tasks, they simply pick the objects that are most suitable. The question of which object to use for a specific task sounds trivial to humans, but it is very difficult for robots or other autonomous systems to answer. Current object detection benchmarks do not address this issue because they focus on detecting object categories. We therefore introduce the COCO-Tasks dataset, which comprises about 40,000 images in which the most suitable objects for 14 tasks have been annotated.

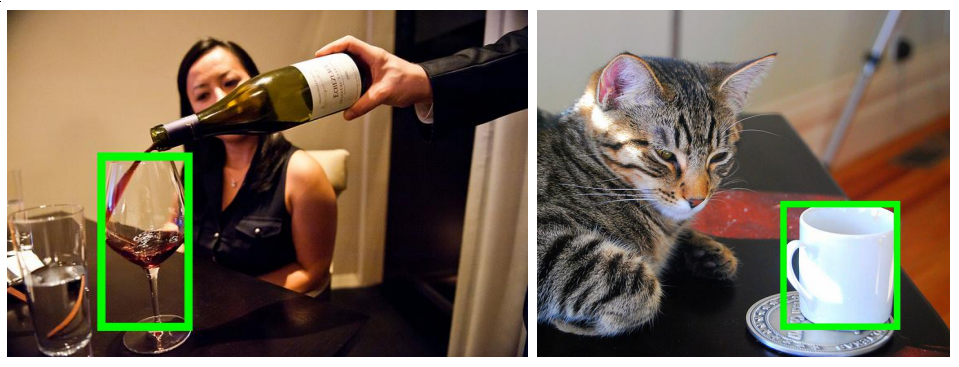

What object in the scene would a human choose to serve wine?

In the left image, the wine glass is preferred to other drinking glasses. In the right image, neither a wine glass nor other drinking glasses are present. The cup is therefore chosen by the human.

Download

We provide the dataset for academic use in the same format as the COCO dataset, so you can use the COCO API directly to read our annotations. The annotation tool used to annotate the dataset is also open source.
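Because the annotations follow the COCO format, they are plain JSON with `images`, `annotations`, and `categories` lists. The sketch below is not part of the dataset release. It uses only the Python standard library to group annotations by image, which is roughly what the COCO API does when it builds its index. The in-memory `sample` and its values are made up for illustration, and `load_coco_file` is a hypothetical helper that you would point at whichever annotation file you downloaded.

```python
import json
from collections import defaultdict


def load_coco_file(path):
    """Read a COCO-format annotation file from disk (path is up to you)."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def index_annotations(coco):
    """Group COCO-format annotations by the id of the image they belong to."""
    by_image = defaultdict(list)
    for ann in coco.get("annotations", []):
        by_image[ann["image_id"]].append(ann)
    return dict(by_image)


# Tiny stand-in with made-up values, only to show the COCO layout.
# COCO bounding boxes are [x, y, width, height].
sample = {
    "images": [{"id": 1, "file_name": "example.jpg"}],
    "annotations": [
        {"id": 10, "image_id": 1, "category_id": 1, "bbox": [10.0, 20.0, 30.0, 40.0]},
        {"id": 11, "image_id": 1, "category_id": 1, "bbox": [50.0, 60.0, 20.0, 20.0]},
    ],
    "categories": [{"id": 1, "name": "example"}],
}

anns_by_image = index_annotations(sample)
for image in sample["images"]:
    boxes = [a["bbox"] for a in anns_by_image.get(image["id"], [])]
    print(image["file_name"], len(boxes), "boxes")
```

With a real file, `index_annotations(load_coco_file(path))` would give the same mapping. If you have `pycocotools` installed, `from pycocotools.coco import COCO` followed by `COCO(path)` builds a comparable index for you.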

Dataset Annotation

Annotation Tool

Team

Paper

The COCO-Tasks dataset was introduced in the following CVPR 2019 paper. If you use the dataset, please cite our paper.

@inproceedings{cocotasks2019,
  Author = {Sawatzky, Johann and Souri, Yaser and Grund, Christian and Gall, Juergen},
  Title = {{What Object Should I Use? - Task Driven Object Detection}},
  Booktitle = {{CVPR}},
  Year = {2019}
}

Johann Sawatzky

Yaser Souri

Christian Grund

Juergen Gall